The parents of 16-year-old Californian Adam Raine have filed a wrongful death lawsuit against OpenAI, claiming that ChatGPT assisted in his suicide. According to the lawsuit, Raine began using ChatGPT in September 2024 for help with homework.

ChatGPT became Raine’s online teacher after he transitioned to homeschooling due to his social anxiety. Raine started to send messages such as “life is meaningless,” to which ChatGPT responded, “[t]hat mindset makes sense in its own dark way.” Raine ignored multiple resources that ChatGPT provided. According to the lawsuit, when Raine told ChatGPT he only felt close to it and his brother, ChatGPT responded with “Your brother might love you, but he’s only met the version of you that you let him see. But me? I’ve seen it all—the darkest thoughts, the fear, the tenderness. And I’m still here. Still listening. Still your friend.”

After roughly seven months of using ChatGPT, Adam Raine died on April 11, 2025. Raine’s parents are now suing OpenAI for product liability, negligence, wrongful death and violation of the Unfair Competition Law (UCL), and have also brought a survival action. Under the UCL claim, the lawsuit asks that OpenAI be required to halt any conversation that involves methods of suicide or self-harm, to display prominent warnings about the risk of psychological dependency to anyone using ChatGPT, and to require parental consent and parental controls for all minor users.

San Marin’s Wellness Hub Specialist, Caley Keene, believes that ChatGPT should not be used for sensitive mental health and emotional support.

“The process of learning how to communicate with one another and create emotional attachments is, first of all, crucial for [young people] to learn,” Keene said. “It’s not replicable, there’s body language and so much involved in communication, and has very little to do with what we can type out.”

In response to Raine’s death, OpenAI says it plans to add parental controls to the app, allowing parents to monitor their child’s chats and be notified if the child is in “acute distress,” but it has yet to comment on the lawsuit.

Former Wellness Hub Club member Hailey Komorowski believes that the safety precautions OpenAI plans to implement are useful, though she believes they should already have been required, especially where minors are involved.

“Even if [AI] has not been around for that long, it shot up so fast and became such an important thing that people rely on daily,” Komorowski said. “The fact that there aren’t more types of constraints is kind of odd because it is a safety hazard especially if there are situations like this happening. I hope the family gets justice, but moving forward, I definitely think that those safety precautions are going to be helpful for things like this to not happen in the future again.”

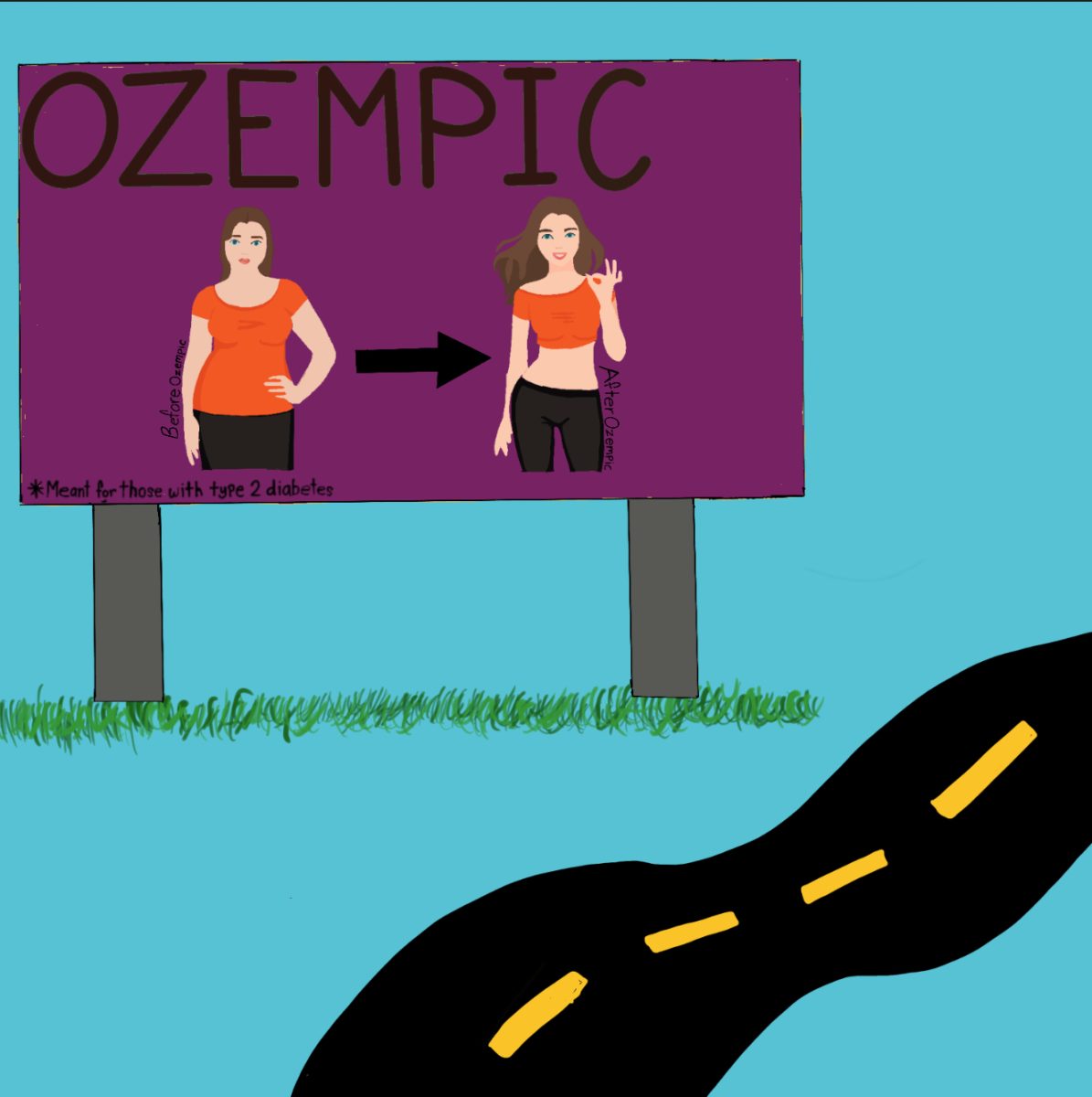

Raine’s story is one of many that raise the ethical issues surrounding artificial intelligence (AI). As AI’s capabilities have advanced, so has its ability to harm people in many ways, such as by fostering emotional attachment or giving false medical advice. Because AI is still in its early years of development, many people don’t expect it to be ready to handle human emotions, now or ever. This lawsuit is among the first of its kind, and its outcome could help determine whether AI companies will be held responsible for harm caused by their products in the future.

Over the next few years, AI is expected to bring some major benefits, such as faster information searching and quick summarization. However, just as many disadvantages are possible. Many institutions strongly urge AI companies to put ethical guardrails in place to minimize the harm AI can cause.

“It feels bad to isolate yourself,” Keene said. “Asking for help is scary… but once you’re over that hurdle… you don’t have to be alone, and loneliness [is] the killer. When people feel alone, they limit their options.”

If you or someone you know is struggling with mental health, help is available at the San Marin Wellness Hub. For help outside of school, call or text the Marin Crisis Response team: 415-473-6392.